
As big data meets enforcement, we canvassed clients to gauge their stance on deploying analytics to root out misconduct

We canvass legal and compliance teams on their handling of data and assess the current trends

The age of big data is upon us. Advances in data analytics and machine learning offer significant potential for compliance-driven monitoring for misconduct. Whether it is monitoring employees, supply chains or customer transactions, there are opportunities for a more efficient and effective compliance function. This will inevitably lead to an increase in regulators’ expectations of businesses’ abilities to monitor, detect and report misconduct. New research shows that while big data features heavily in most organisations’ commercial strategy, far fewer are considering its application in monitoring for misconduct.

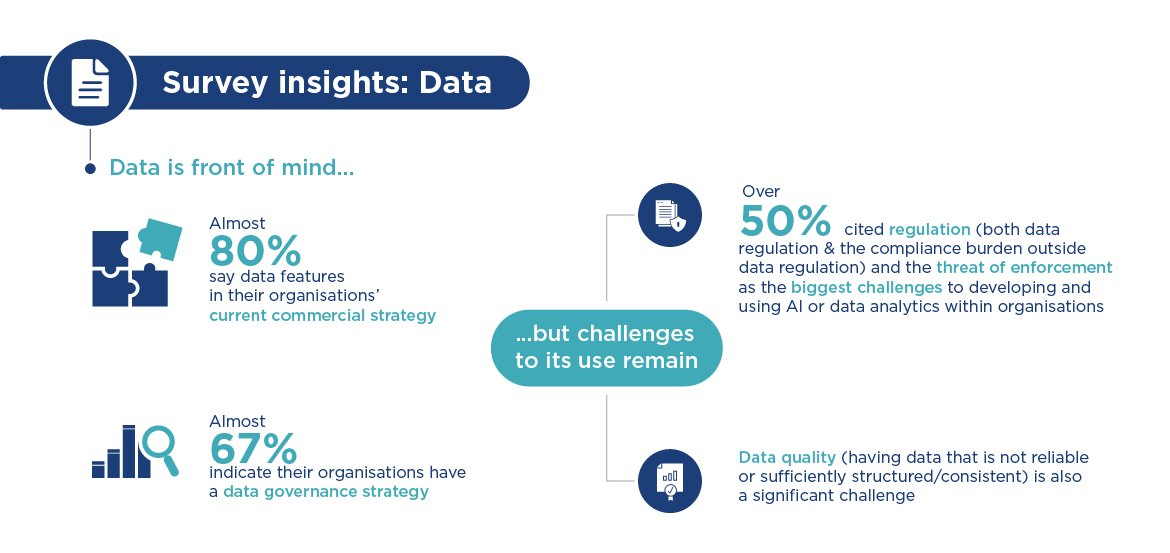

Herbert Smith Freehills surveyed a number of clients in general counsel, senior legal, compliance and risk roles in organisations spanning multiple sectors and jurisdictions. For nearly 80% of respondents, data plays a role in their organisations’ commercial strategy, and over 66% have a data governance strategy in place.

Given the proliferation of data, organisations need to find ways to manage and use data effectively in a targeted way.

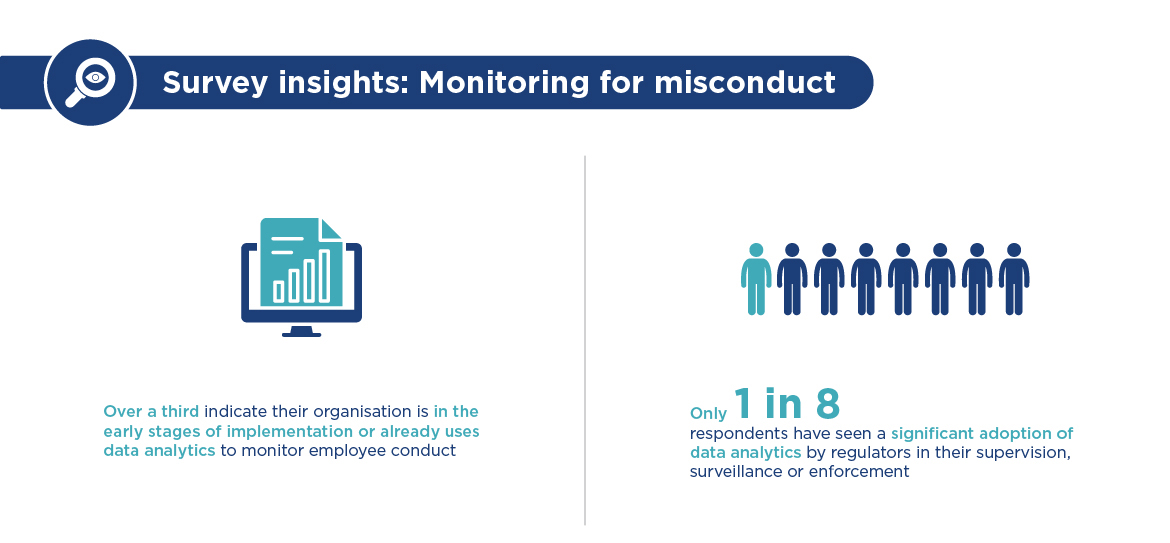

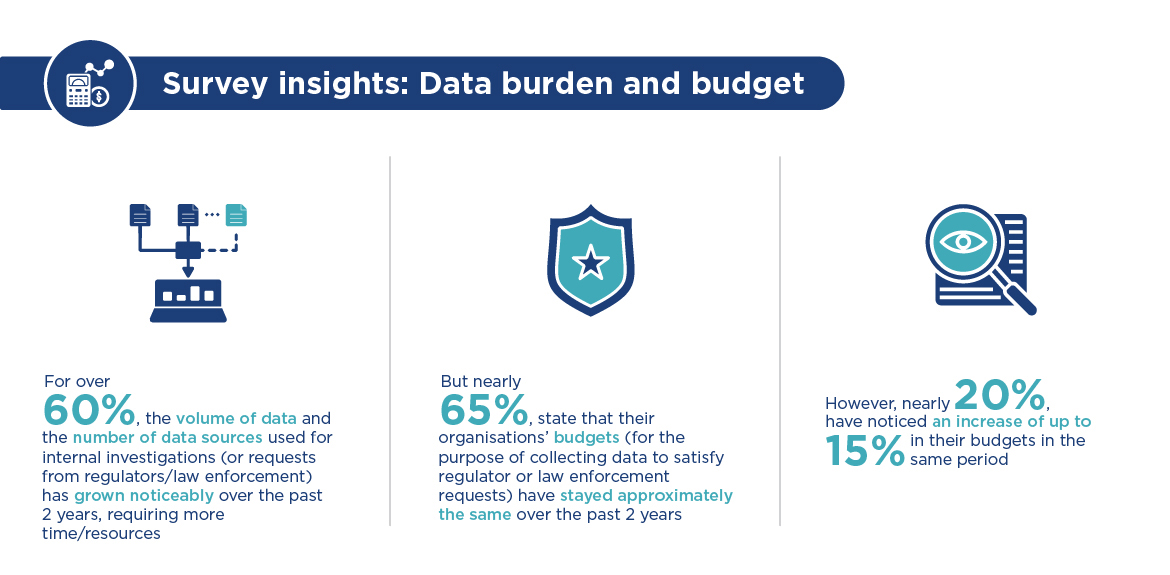

The research found that data analytics and artificial intelligence (AI) are being used primarily to improve efficiency and operations as well as corporate strategy. However, there was less of a focus on how data could be used to proactively monitor compliance or more efficiently meet regulatory requests and expectations. For instance, only 8% of organisations are relying on internally generated data to monitor or supervise their employees. This may indicate that the private sector is at risk of failing to prioritise modernising compliance and investigations functions at a time when regulators are becoming increasingly technologically sophisticated.

Hong Kong partner Jeremy Birch comments on this trend: “There is a lot of excitement around the automation of monitoring activities. What used to be a manual and very labour-intensive process is becoming less so. However, while the introduction of technology is changing the playing field, data quality, for some organisations, may still be preventing advancement.

“One in five (18%) respondents report having concerns over the quality of the organisation’s data. While respondents didn’t note this as their main concern, it still demonstrates that not all organisations have the right data sources to implement monitoring for misconduct in a meaningful way.”

Hong Kong senior consultant Tess Lumsdaine agrees that the sophistication of monitoring systems, along with issues around how data is collected and used, is part of the problem. Corporate goliaths such as big tech, as well as many governments, may have the resources to collect and analyse massive amounts of data. But as Lumsdaine observes, “We are not seeing that kind of sophistication in the private sector in relation to employee monitoring.”

Many private organisations do not have access to data, or the quality of the data they have isn’t sufficient to conduct sophisticated analytics with useful output. An increase in resources may help to manage this. Ultimately, however, businesses need to consider what they’re trying to achieve from gathering data so they can implement the appropriate IT infrastructure to attain the data pool they need and use it in a valuable way.

Tess adds, “If a business is looking to monitor a certain type of misconduct or within a market, they may wish to focus their efforts around that, which in turn will allow them to manage the risks of complying with privacy legislation, together with considerations under employment and discrimination law.”

According to our respondents, regulators’ primary interest lies in understanding how organisations store, use, retain and transfer data. Second to this is an interest in the nature of data held by organisations (whether it is harmful or shared with third parties) and the protection of data (including breaches) and privacy.

It is clear that regulators are broadening their focus, as London partner Kate Meakin identifies: “Organisations which are in the regulated sector are used to having positive obligations in respect of the misconduct that they identify and how they deal with it, and that regulator interest is only expanding. For example, in the UK the Financial Conduct Authority has broadened its scope to also focus on non-financial misconduct in addition to the other financial misconduct they’ve always historically looked at and prioritised. But even in the non-regulated sector, some of the legal developments in the UK are leading towards more active monitoring, rather than reacting to issues once they arise.”

Uncertainty around regulatory requirements may be impeding the use of big data for compliance monitoring, so it’s no surprise that 50% of survey respondents cited regulation as a challenge around the use of big data and AI, though 75% believe the development and use of AI should be subject to regulation.

“If we look at the regulatory landscape (around big data) it is still quite immature,” notes Jeremy. “This is seeing large organisations proceed cautiously even though this could slow the development of AI and data analytics applications.”

Organisations are focusing on the benefits of using data to gain commercial advantage, with almost 80% of respondents affirming that data features in their current commercial strategy. “A focus on the commercial applications of big data is not without upsides for compliance, as this will ultimately improve the data quality within the organisation which, over time, will also allow the data to be used for compliance purposes,” says Jeremy.

According to respondents, data analytics or artificial intelligence is not only used to improve efficiency and operations, but also features heavily in research and reporting, and for data collection, analysis and business strategy. Interestingly, however, data analytics or AI does not appear to be used much for investigations or for surveillance purposes.

That said, not all organisations are lagging when it comes to using big data and AI to monitor for misconduct. Some sectors are ahead of the curve, and Sydney partner Peter Jones believes the tech sector as well as financial services may be chief among them. He explains, “The technology sector, unsurprisingly, is handling big data well. The financial services sector is also at the vanguard. In jurisdictions such as Australia this is partly being driven by the massive degree of media and political scrutiny around the major banks.”

Developments in technology continue to provide new challenges for existing privacy legislation, and organisations can face a fine line between using data to monitor for misconduct and breaching privacy requirements. For multinational organisations, this can be a particularly complex area.

Kate explains, “Business leaders are aware of data laws, privacy laws – and their employees’ rights. Organisations are grappling with these sorts of issues, especially when they operate internationally, where there are often subtle variations in regulations as well as local expectations and norms.”

These variations mean a one-size-fits-all approach is inappropriate. As Melbourne privacy specialist Kaman Tsoi notes, “particular monitoring activities may raise issues under data protection laws, surveillance laws, telecommunications laws, cybercrime laws, industry-specific requirements, or a combination of these.” Organisations need to consider what they are aiming to achieve, and address the types of monitoring undertaken in relation to the risks the business faces. “It’s not a tick-box exercise,” says Kate. “Organisations should determine for new initiatives and on a periodic basis the risks present in their activities either because of who they are dealing with, or which countries and sector they operate in, and then try to mitigate those risks.” Risk assessment processes such as data protection impact assessments can be useful, and will sometimes be legally required.

Kate adds that it is critical for organisations to target resources effectively when it comes to the collection and management of big data. The key is to find a balance between collecting large volumes of data with no particular end goal, versus looking too narrowly.

AI tools can process tremendous amounts of data very quickly, and there is a legal argument that organisations should be able to use big data to monitor employee actions. But as Peter observes, “There is also a moral or social dimension. It comes back to the argument that just because an organisation can do something, doesn’t mean it should.”

Summing up the situation, Jeremy says, “Disclosure and consent typically form the basis of most privacy regimes. In the era of big data, there is a shift toward focusing on how that data is being used – and if it is being used in an ethical way.”

Jeremy predicts that:

The contents of this publication are for reference purposes only and may not be current as at the date of accessing this publication. They do not constitute legal advice and should not be relied upon as such. Specific legal advice about your specific circumstances should always be sought separately before taking any action based on this publication.

© Herbert Smith Freehills 2024
