
While regulation of the internet in the UK has (up until recently) been light-touch, the last decade has exposed countless examples of the harmful impact of online content and has highlighted the need for greater regulation in this area.

In a bid to make the UK 'one of the safest places to be on the internet', the UK Government has put together a sweeping new piece of legislation, known as the Online Safety Bill, to confront the question of what one can and cannot say online and the role that internet service providers will play as arbiters of this debate.

The lengths to which internet service providers can (or even should) be required to go in order to improve online safety remain one of the greatest challenges and uncertainties arising from this legislation and will be one of the key areas of focus as the Bill moves through the legislative process.

The Bill seeks to provide a robust framework for regulating harmful content (such as hate speech, cyber-bullying, misinformation, targeted advertising and the use of algorithms and automated decision-making) on the internet and is expected to set a global benchmark for online regulation going forward. Since its introduction, the Bill has attracted a great deal of publicity and has been scrutinised by a Joint Select Committee, which has taken oral evidence from key industry players such as Facebook, Twitter, Google and TikTok, as well as from Facebook whistleblower Frances Haugen.

Among the more controversial aspects of the Bill are the measures targeted at content that is deemed to be harmful but is not actually illegal. The Bill proposes a range of new duties of care for regulated service providers (see the FAQs for more information on who the Bill applies to) and these include duties for providers of services likely to be accessed by children and so-called "Category 1 services" (a category that is expected to cover the largest, most popular social media sites):

The Bill includes more onerous obligations in relation to higher-risk content which the Secretary of State may in the future designate (following consultation with Ofcom) as "primary priority content" or "priority content" (e.g. a requirement to use proportionate systems and processes to prevent children encountering primary priority content).

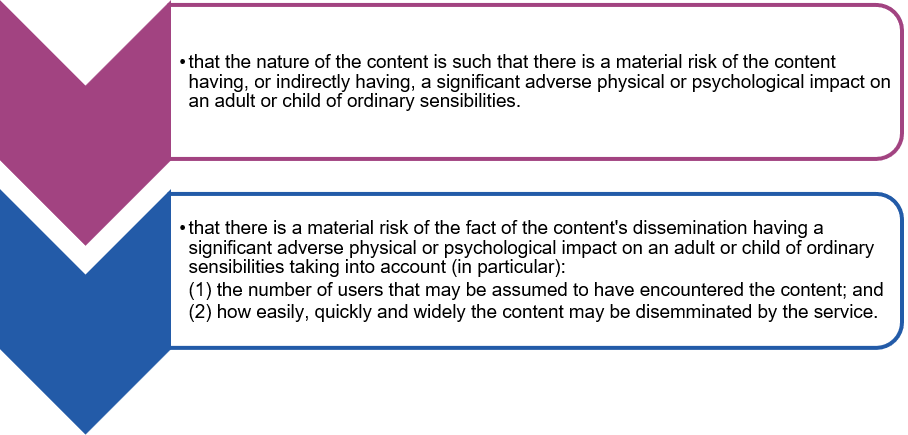

According to the explanatory notes to the Bill, harmful content could range from online bullying and abuse, to advocacy of self-harm, to spreading disinformation and misinformation. In addition to the designated categories of higher-risk content as mentioned above, the Bill contains a legislative test as part of the definition of harmful content. A regulated service provider would need to comply with the duties set out above in relation to all content in respect of which the provider has reasonable grounds to believe any of the following:

The Bill further clarifies that in applying the first test in relation to content that can reasonably be assumed to particularly affect people with a certain characteristic (or combination of characteristics), or to particularly affect a certain group of people, the provider must assume that the hypothetical adult or child possesses that characteristic (or combination of characteristics), or is a member of that group (as the case may be).

The biggest criticism of this definition of harmful content contained in the Bill is that the tests set out above are too vague for social media companies to apply in practice. For example, in its evidence before the Joint Committee, Google noted that although YouTube already prohibits hate speech, detecting it on a global stage is challenging due to the relevance of the context in which it is spoken. Twitter's Head of Public Policy in the UK, Katy Minshall, also noted to the press that the Bill does not go far enough in defining this category.

In her evidence, the Culture Secretary Nadine Dorries welcomed recommendations from the Joint Committee that might help to make the definition more watertight and noted that the Department for Digital, Culture, Media & Sport (DCMS) was considering the Law Commission's recommendations on defining new harm-based communication offences in its Report on Communication Offences. The Law Commission has explained that the proposed recommendations are intended to ensure that speech that is genuinely harmful does not escape criminal sanction merely because it does not fit within one of the proscribed categories under existing communications legislation. At the same time, the recommendations will ensure a context-based assessment of speech so that communication that is not harmful is not restricted simply because it could be described as falling into one of the existing categories such as being grossly indecent or offensive.

Stakeholders are understandably concerned about the risks posed to freedom of speech and expression by harmful content rules which may be perceived as overly vague or broad. Adding to this concern, Category 1 service providers will also be required to balance their obligations in relation to harmful content with a separate obligation to protect information of democratic importance and journalistic content. Without additional clarity on what is and is not harmful content, this may prove to be a difficult balancing act and one which is likely to place a much greater burden on online service providers as the moderators of online content than they may have anticipated or be equipped to accommodate.

It is clear that regulated service providers will rely heavily on AI to facilitate the monitoring and takedown of problematic content in order to comply with the Bill. However, several stakeholders have questioned whether algorithmic moderation can recognise the nuance and subtleties required to identify harmful content effectively without encroaching on freedom of speech and expression. For instance, an algorithm may flag speech that is satire or is intended to raise positive awareness about an issue such as prevention of suicide. As an example, TikTok explained in its evidence to the Joint Committee that "when it comes to harm… getting rid of most of the stuff is straightforward; it is the bit where nuance and context are required and how you do that at massive scale, which is difficult".

The Department for Digital, Culture, Media & Sport explained in its evidence to the Joint Committee that the Bill's focus is entirely on systems and processes rather than on reviewing individual pieces of content. According to DCMS, this will ensure that the regime stays relevant to the growing number of harms and does not overburden companies in a way that leads to over-removal of content. In its evidence to the Joint Committee, Twitter encouraged this "safety by design" approach while flagging that the regulations should remain mindful of the technological barriers that exist in deploying safety tools. It noted that content moderation technologies continue to exist in silos and are most effectively used by big players operating on a large scale. Twitter added that ensuring that a range of service providers can access these technologies (as well as the underpinning data through robust information sharing channels) will be crucial to effectively address harmful content.

Notably, the Bill creates a complaint mechanism for users who feel that their content has been unfairly removed. In her evidence to the Joint Committee, Information Commissioner Elizabeth Denham cautioned that an overwhelming number of individual complaints could be received if regulated service providers do not put adequate accountability safeguards in place, especially with respect to algorithmic or AI-based moderation. If a regulated service provider implements a system that ultimately leads to under-flagging or over-flagging of content, it is unclear how this will be treated by Ofcom from a compliance perspective when it comes to enforcement of the Bill.

Given the issues set out above, the Joint Committee is likely to carry out a robust review of the harmful content regime and it would not be surprising to see substantive revisions to this regime both before the Bill is formally introduced to Parliament and as the Bill makes its journey through the legislative process. Even after the Bill comes into force, the full picture (particularly when it comes to the practical and operational realities of this regime) will only become clear as and when secondary legislation and guidance are made available.

Whilst the draft legislation is still very much in a state of flux, there are some practical steps that regulated service providers can take in readiness for the legislation. For example, service providers within the scope of the legislation may wish to:

Given that the EU's own online safety regime (in the form of the proposed Digital Services Act and Digital Markets Act) is being developed in parallel, there may be ways for companies that fall within the scope of both the UK and EU legislation to reduce their compliance burden by responding to both initiatives in lock-step.

The contents of this publication are for reference purposes only and may not be current as at the date of accessing this publication. They do not constitute legal advice and should not be relied upon as such. Specific legal advice about your specific circumstances should always be sought separately before taking any action based on this publication.

© Herbert Smith Freehills 2024
